Enjoying life and nature is something I love to do. And I’d rather do that than spend time on my phone talking about how I enjoyed what I do with my life or how my cycling went. I love my phone, I just don’t want to waste more time on it than needed. Life and nature outweigh fiddling around on the phone ‘to get things done’. #zen.

There’s a discussion I frequently have when I read an article, read up on a topic, or get provoked to think about something tech, where I go: “yeah exactly, wouldn’t it be cool if this could do that?” That’s what this blog post is about.

tl;dr Every so often I ponder why we are not enjoying full convenience yet, because wouldn’t it be great if some service or product would do a particular handy little thing?

Be it a full desktop operating system (macOS) or a mobile OS like Android or iOS, there are so many little things that are done so smartly, while there’s still so much left to be desired. I have discussions with my friends where we shake our heads at “what were these developers thinking”, as well as being astounded that technology has already come this far. Maybe I will give a few examples.

It is just fantastic that the screen isn’t like Microsoft Word: a too-small font showing only a portion of your document, with margins, toolbars, and whatever else filling up about 55% of your workspace (monitor). How people get anything done is beyond me. It’s why I don’t pay for it, and it’s why I invest in iA Writer, which is full screen, with big easy fonts and the clutter hidden away. And iOS encourages this. You have content, and the menu is a slide away. The options are context-aware in the virtual keyboard, and direct actions are a swipe away. It’s innovative, intuitive, direct, and useful. Sure, there’s a tiny learning curve, because the button isn’t there 24/7 in your face. But in my humble opinion it’s not more of a learning curve than the time it takes to go through hundreds of buttons to find that one you know has to be somewhere. And what you learn in one program usually applies to the rest. I love it. That said, it’s beyond me why swiping on an inbox email in Apple’s Mail app doesn’t include Trash.

On the other hand, it’s crazy to think that a lot of the convenience we desire is just held back by a technical limitation. Or it’s something that’s possible but was simply left out when weighed against battery life. Or maybe the competition has a patent or some trademark. Who knows, but, wouldn’t it be cool if …

After reading up on context-stacking, linguistic derivation, and whatnot, I ended up in a ten-minute chat brainstorming some pros and cons of, say, iOS including this as a system-wide API. Personally, I find it hard to think that a future iOS version won’t include it. Anyway, this is what this blog post is about: me sharing this thought / discussion, because I’d love to read back in a few years and see if we all went in that direction or whatever.

Whatever we’re saying in programs, to a computer, to a person, at home, at work, etc., it all has context. The device knows who we are, who we talk to, where we are, when we are, etc. And I think it would be amazing if it could take that, derive what’s going on, and help me take the next step in a predictive manner. Learn as time goes by, and adapt as things change. With enough control under Settings to include or exclude certain events, situations, privacy-sensitive stuff, etc. I will start with an example of what we have ‘right now’, which I think is very smart but kind of ‘passive’, and then share an example of what I think would be pretty great: a pretty complex feature that looks so simple and helps us daily.

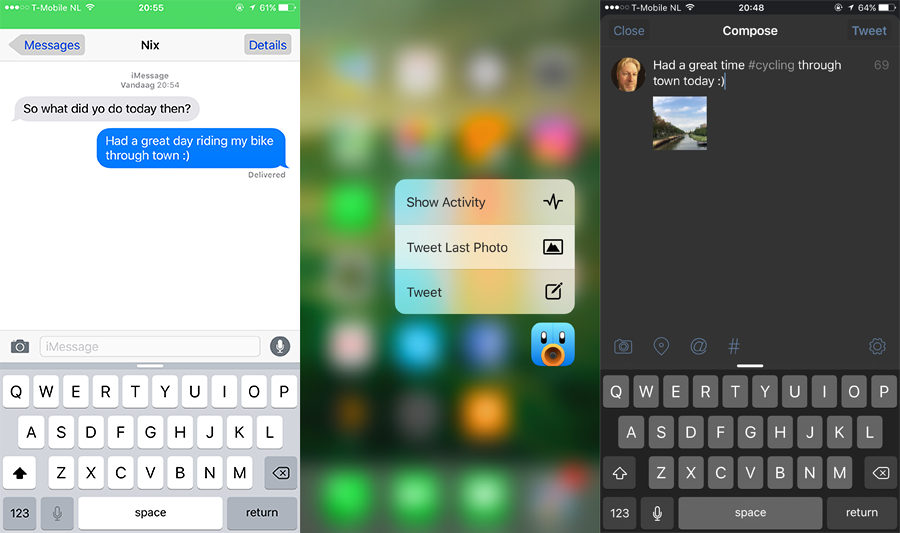

Anyway, right now. If I take a picture, my next step is perhaps logical: I want to Instagram, Tweet, Facebook, or Flickr the picture into the social domain. I can open Facebook, for example, and the post has my latest pictures listed. I can select it, post it, done. That’s what we think is all normal. Okay, let’s up the ante. Wouldn’t it be nice if we could skip the steps of going into Facebook, clicking Status Update, selecting the picture, writing our message, reviewing it, clicking Post, etc.? Tweetbot, for example, is something I use a lot to tweet things I am doing (short micro-blogging of my life). After I take the picture I 3D-Touch (touching with force) on the Tweetbot icon, and right there it says “New tweet with Picture..”. It opens the app for me, in the right spot, with that picture I just took as an attachment, and I can focus on my tweet. Done in a few seconds. Convenience to the max.

To the max, really? No, not really. It misses a few things, and that’s what I had the chat about today with a friend. That’s the point of this blog post. I think we’re not there yet. There is so much 64-bit processing power in that device, with amazing battery life and so much co-processing being done. And the things we do are actually predictable and in context. We can go to the max, but we’re not there yet. Wouldn’t it be great if Apple, for example, had a system-wide context-API that lets apps help us (but for privacy reasons: not transmit back to the developer or advertiser) stack context, derive situations, predict situations, and assist?

Say I am in a conversation with a friend and type: “You’re free tomorrow? Ok, chat with you then.” or “Just been out cycling today, how about you?” and I leave the app. This context-API thingy could then have figured out what time it is now, and when the things we talked about happened or will happen: when, why, where, and with whom, or just me. It has my details, and it has the details of who I speak with. It knows I am home, but that person is in a different timezone, and is at work. It can derive this information, stack it up against plausible situations, and predict what’s most likely going to happen next.

Maybe I want to make a note of this, or a calendar event to meet up. Maybe I want to tweet something about today or this chat. Maybe I have to book an Uber or plane ticket to meet up?

In my situation, I am at home, not at work, the other person seems to be free tomorrow, and the action is to chat then. I could imagine that when I open Tweetbot, the new tweet has a temporary (auto-expiring) draft, or the message in grey until I type over it, saying something like “Looking forward to chatting to my buddy @username tomorrow”. I can also imagine this isn’t something you might want to tweet, hence the grey text or draft, so it’s easy to ignore it and write something else.

But I can imagine that if I open up my Notes app, some Todo app, or a Calendar app, it might make an event (again, a draft or temporary auto-expiring one) that’s populated with a reminder: ‘UserX is free today, maybe poke for a chat at x pm?’ (it can even take into account the timezone difference so we don’t poke the user at 4am their time while it’s 6pm over here).
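Just to make that timezone bit concrete, here is a rough Python sketch of how such a reminder time could be picked. None of this is a real iOS API: the function name `suggest_poke_time`, the fixed UTC offsets, and the 9-to-21 "polite hours" are all my own assumptions for illustration.

```python
from datetime import datetime, timedelta, timezone


def suggest_poke_time(my_preferred: datetime, friend_tz: timezone,
                      earliest_hour: int = 9, latest_hour: int = 21) -> datetime:
    """Return a reminder time (in my timezone) that is polite for the friend.

    Hypothetical helper: the polite-hours window is an assumed default,
    not something any real system prescribes.
    """
    # What does my preferred moment look like on the friend's clock?
    friend_local = my_preferred.astimezone(friend_tz)

    if friend_local.hour < earliest_hour:
        # Too early for them: push to their earliest polite hour, same day.
        friend_local = friend_local.replace(
            hour=earliest_hour, minute=0, second=0, microsecond=0)
    elif friend_local.hour >= latest_hour:
        # Too late for them: push to their next morning.
        friend_local = (friend_local + timedelta(days=1)).replace(
            hour=earliest_hour, minute=0, second=0, microsecond=0)

    # Convert back so the reminder shows up on my own clock.
    return friend_local.astimezone(my_preferred.tzinfo)


# I am at UTC+2 and would like to poke at 6pm; my friend is at UTC+12,
# where that same moment is 4am the next day.
me = datetime(2024, 6, 1, 18, 0, tzinfo=timezone(timedelta(hours=2)))
friend = timezone(timedelta(hours=12))
print(suggest_poke_time(me, friend))
```

A real version would obviously use proper timezone data and learn the polite hours per contact, but the idea is the same: check the moment on their clock before suggesting it on mine.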

Okay, another type of example where I think this sort of context aware computing is super handy: Learn about who I am, where I am, what I do, and adjust my system usage automatically.

A current example: I tell my phone when my sleeping hours are in Night Shift, so when I go to sleep the screen automatically shifts from blue to yellowish. But this isn’t to the max. Let’s think about to the max (well, within the realm of this blog post).

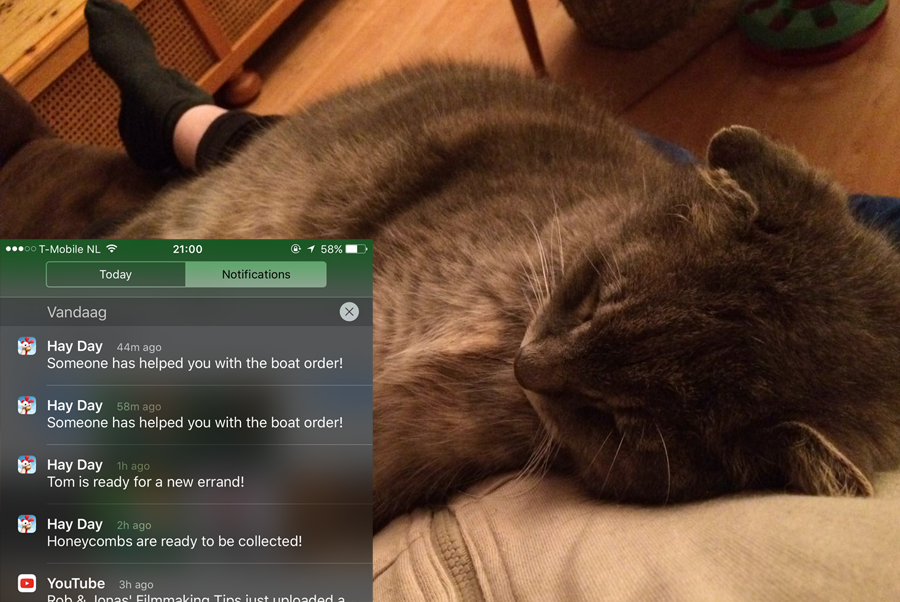

I think it is so illogical to get Hay Day notifications at 5am when my phone is flat on a surface, on a charger, on stand-by, and not in use for a while. Especially when it’s about the same time every day and we can derive it’s either work hours or sleeping hours. Either way: Stop buzzing. Collect this information and group-notify me quietly when I make the phone active, or something along those lines. I’d rather stay asleep at night knowing I don’t have to dumb down my phone with settings and flipping buttons to mute etc. And I’d rather wake up and get a notification then, saying there’s been activity on a game or whatever.

Heck, this way contacts that are marked as a favourite can still call or notify me. Which is more logical to wake up to. An important business poke (not relevant to me by the way), or a family member in distress or whatever. You want to get those, not the other illogical stuff.

Recognise the phone’s flat, not in use for x time, every y period of the day, at geo location home/work, etc. And adapt the frequency of notifications, or increase grouping of notifications, or simply stop making buzzing or bleeping sounds and only give mute-notifications that don’t light up the screen.
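To show how simple the core rule could be, here is a minimal Python sketch of that decision. Everything in it is made up for illustration: `DeviceState`, `Notification`, `decide`, `summarise`, and the 30-minute idle threshold are hypothetical names and values, not any real notification API.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class DeviceState:
    lying_flat: bool
    idle_minutes: int
    in_usual_quiet_period: bool  # assumed to be learned: sleeping or working hours


@dataclass
class Notification:
    sender: str
    is_favourite_contact: bool = False


def decide(state: DeviceState, note: Notification, idle_threshold: int = 30) -> str:
    """Return 'alert' to buzz now, or 'hold' to deliver quietly later."""
    # Favourites always get through, whatever the phone is doing.
    if note.is_favourite_contact:
        return "alert"
    resting = (state.lying_flat
               and state.idle_minutes >= idle_threshold
               and state.in_usual_quiet_period)
    return "hold" if resting else "alert"


def summarise(held: list) -> list:
    """Group held notifications per sender for one quiet summary."""
    counts = Counter(note.sender for note in held)
    return [f"{sender}: {count} new" for sender, count in counts.items()]


night = DeviceState(lying_flat=True, idle_minutes=120, in_usual_quiet_period=True)
print(decide(night, Notification("Hay Day")))                         # hold
print(decide(night, Notification("Mum", is_favourite_contact=True)))  # alert
print(summarise([Notification("Hay Day"), Notification("Hay Day")]))
```

The hard part is of course learning `in_usual_quiet_period` reliably; the rule on top of it stays tiny.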

Wouldn’t that be a smarter way to use technology to our benefit, improve the convenience without compromising usability? In this situation I can imagine this might actually improve battery life as well. But maybe that’s debatable.

Think about it. If the balance is respectful to the consumer and we’re not turned into the product, and, like I mentioned before, this isn’t forced on us and the computed data isn’t returned to the developer and advertisers, then it’s something that benefits us and makes the phone in our pocket so much more valuable.

If that’s the case, I wouldn’t mind having a chat with a friend saying I had a great time today riding my bike through town, and having the system recognise I’ve edited and trashed a few pictures with the metadata of this ‘town’ from ‘today’, for ‘the pictures I took’, and make a plausible prediction. So when I open Facebook or ask “Hey Siri, can you post today’s cycling event?”, there is a greyish Status Update pending with something in natural language saying: Some pictures from cycling through Purmerend today. (And a smiley face, because I said in the iMessage earlier that I had a great time.)

To prevent repetitive lazy posting, we could at least customise the message and update the pictures, or instead of a smiley it could actually quote you or use a synonym, etc.

And if I don’t click into that greyish message, it lets me post as if it’s a completely new post. And if I don’t open Facebook for a certain amount of time, that suggested pending update just expires.
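The auto-expiring greyish suggestion is easy to picture in code too. A small Python sketch, where `SuggestedDraft`, `compose_placeholder`, and the 12-hour lifetime are purely my own assumptions and not how any real app does it:

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class SuggestedDraft:
    text: str
    created_at: datetime
    ttl: timedelta = timedelta(hours=12)  # assumed lifetime of a suggestion

    def is_expired(self, now: datetime) -> bool:
        return now - self.created_at >= self.ttl


def compose_placeholder(draft: Optional[SuggestedDraft], now: datetime) -> str:
    """Grey placeholder text for the compose box; empty means a blank new post."""
    if draft is None or draft.is_expired(now):
        return ""
    return draft.text


made_at = datetime(2024, 6, 1, 12, 0)
draft = SuggestedDraft("Some pictures from cycling through Purmerend today. :)", made_at)
print(compose_placeholder(draft, made_at + timedelta(hours=1)))   # still suggested
print(compose_placeholder(draft, made_at + timedelta(hours=13)))  # expired, blank
```

Because the suggestion is only ever placeholder text, ignoring it costs nothing: you type over it, or it quietly goes away.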

Okay, last example. Business. Imagine having a Slack conversation or a phone call or whatever with someone and you mention that you should have time this month to book for the Google event.

The ‘personal assistant’ could derive that it’s you, that you’re talking during business hours at the geo location of work to someone who’s a contact from ‘work’ (and not family or friends) in another country, and that there’s a Google event this month. Your Calendar shows you have no pressing matters that week, the hotel search shows there are rooms nearby of a certain standard, and the company budget for this whole event covers it. When you then ask Siri to come up with a suggestion, you should get a visual presentation of some options to book a plane ticket (because it’s in another country). It asks whether you want to meet up with your friend prior to the event (same hotel?), offers to schedule Uber rides or the like, and lets you place the order. So the tickets are booked, hotel rooms reserved, rides scheduled, meetings planned, and reminders set to pack, leave for the airport, and inform others at your company via email for all I care. And all you have to do is walk to check-in and scan your boarding pass from the Wallet app.

All from just learning who you are, where you are, when you are, and what happened, is happening, and understanding context and deriving situations from it and predicting what you might want to do next based on that. I think it would be pretty cool if it could do that.

3D-Touch on Tweetbot > Tweet latest picture is a great first step if you ask me. And I think it’s a sign that we’re entering the era of apps where we go beyond the app. Use the app without going into the app.

Anyway, I can think up hundreds of examples, but I hope I’ve already made my point. Let’s finish with a quick example that I think would be pretty handy for the busy mom (bonus example haha).

“Hey Janice, I’d love to go see a movie Friday, but I have to get a sitter first” (switches to contact) ‘Pre filled: Hey (regular sitter), you available Friday evening? :)’ And mom only has to press Enter. And since a follow up action was done, it slides down in the top ‘Want to see which movies play Friday night?’ Mom’s already looking forward to her break. Haha, sorry, I just had to.